Have you ever wondered how the brain remembers places, people, and conversations long after they happened? How do we learn a new language? How do we make decisions during a crisis? All these processes (learning, memory, perception, attention, judgment, problem solving, and decision-making) are collectively known as cognition in neuroscience.

Traditionally, researchers have studied cognition by manipulating observable behaviors such as learning and memory in animal models. In the 1800s, researchers employed Galen’s approach (the lesion method) to map behaviors to specific regions of the brain: if damage to a brain region impairs performance on a task, that region is assumed to be involved in the task. The discovery of X-rays improved this approach by making it possible to locate brain damage in living patients. But the approach has a limitation: it does not reveal the exact function of the damaged region, only how the brain performs without it. Electrophysiological and single-cell recording methods in animal models then revolutionized the field of cognitive neuroscience. Later, the advent of brain imaging techniques such as electroencephalography (EEG), positron emission tomography (PET), and functional magnetic resonance imaging (fMRI) made it easier to study these processes in humans non-invasively. These techniques generated piles of data and created a need for greater analytical capacity, and computational models now save us much of that time and manpower.

Computational modeling follows a set of rules defined by researchers to analyze the data and replicate the experimental output. This could help us generate data without using an organism and predict how the system will behave under certain conditions. In more specific terms, computational modeling integrates mathematics, physics, and computer science. Every process has several parameters, or conditions and limits within which it works, and computational models use these parameters to imitate the process. Dr. Thomas Trappenberg, a computational neuroscientist at Oxford University, defined computational neuroscience as the theoretical study of the brain used to uncover the principles and mechanisms that guide the development, organization, information processing, and mental abilities of the nervous system.

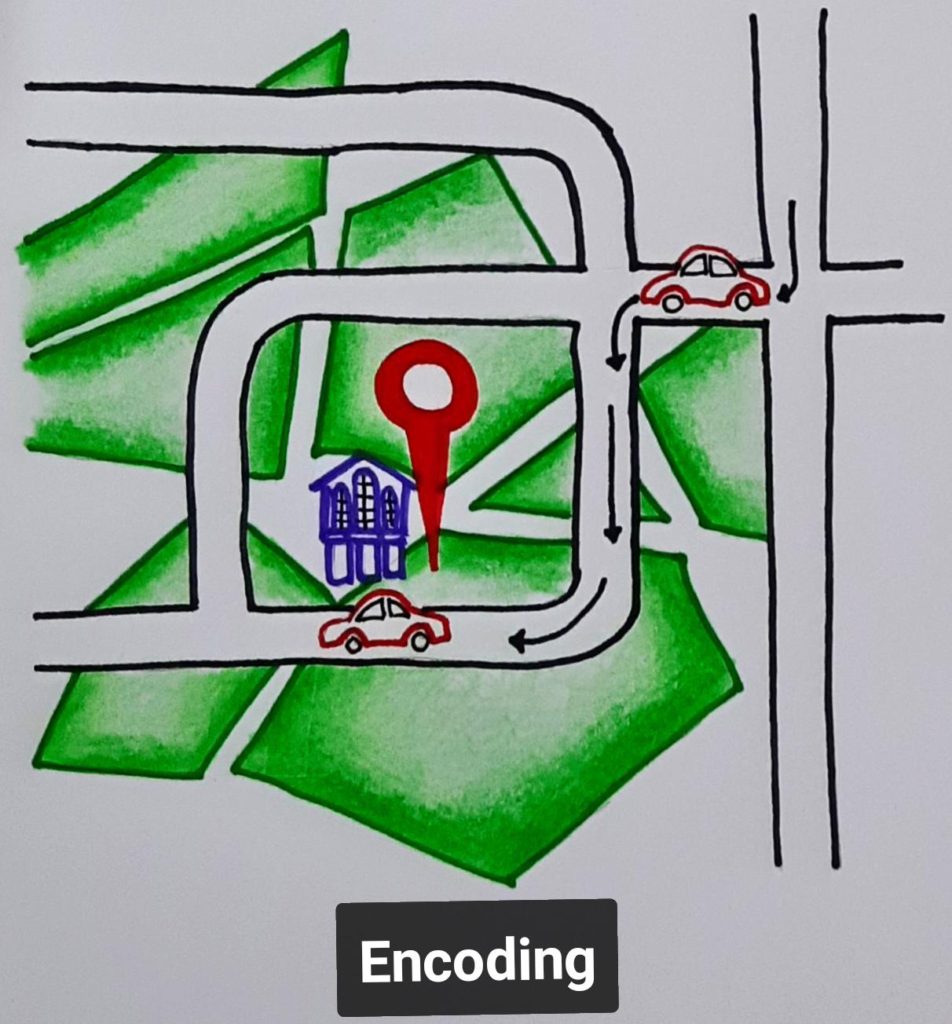

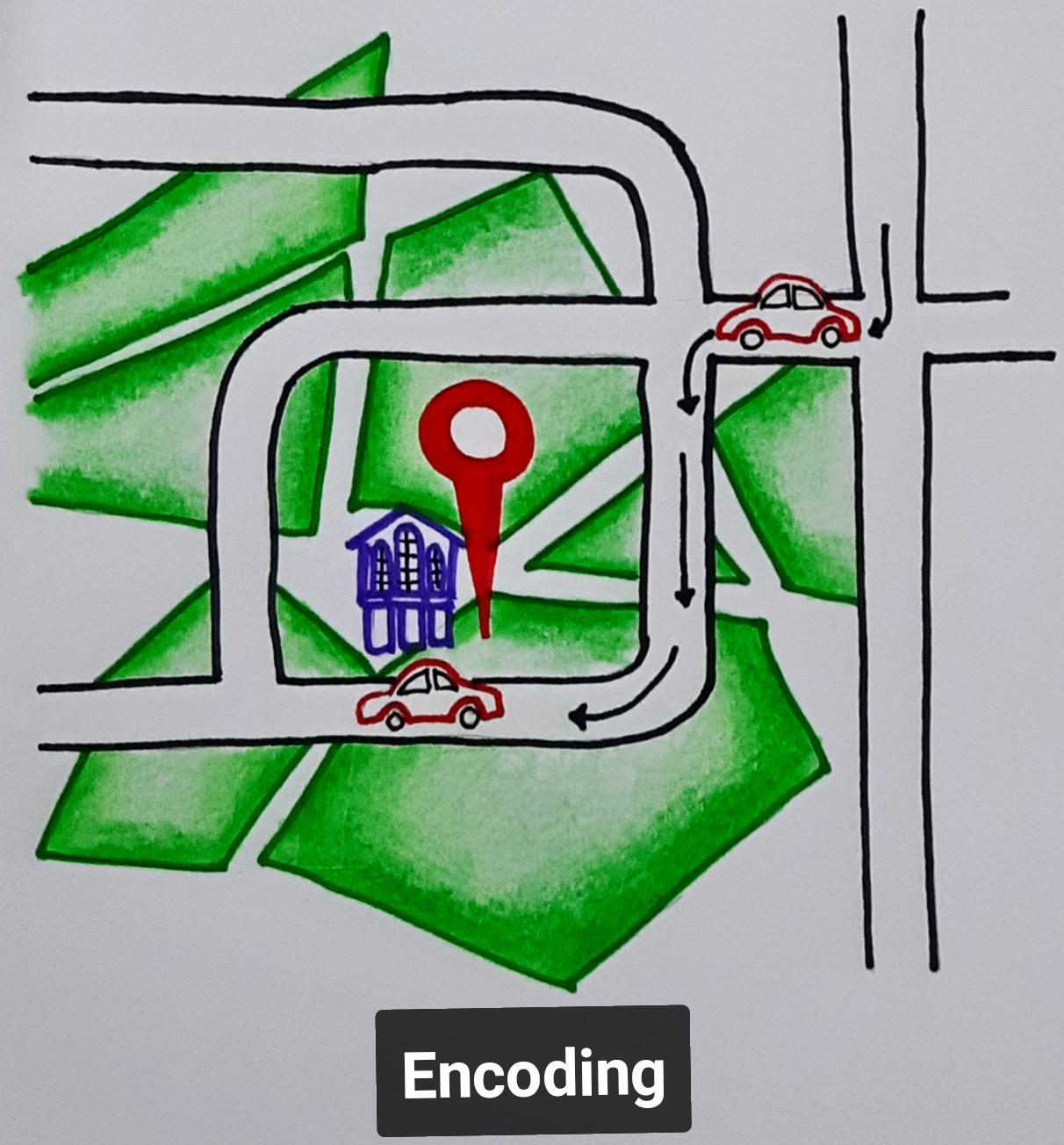

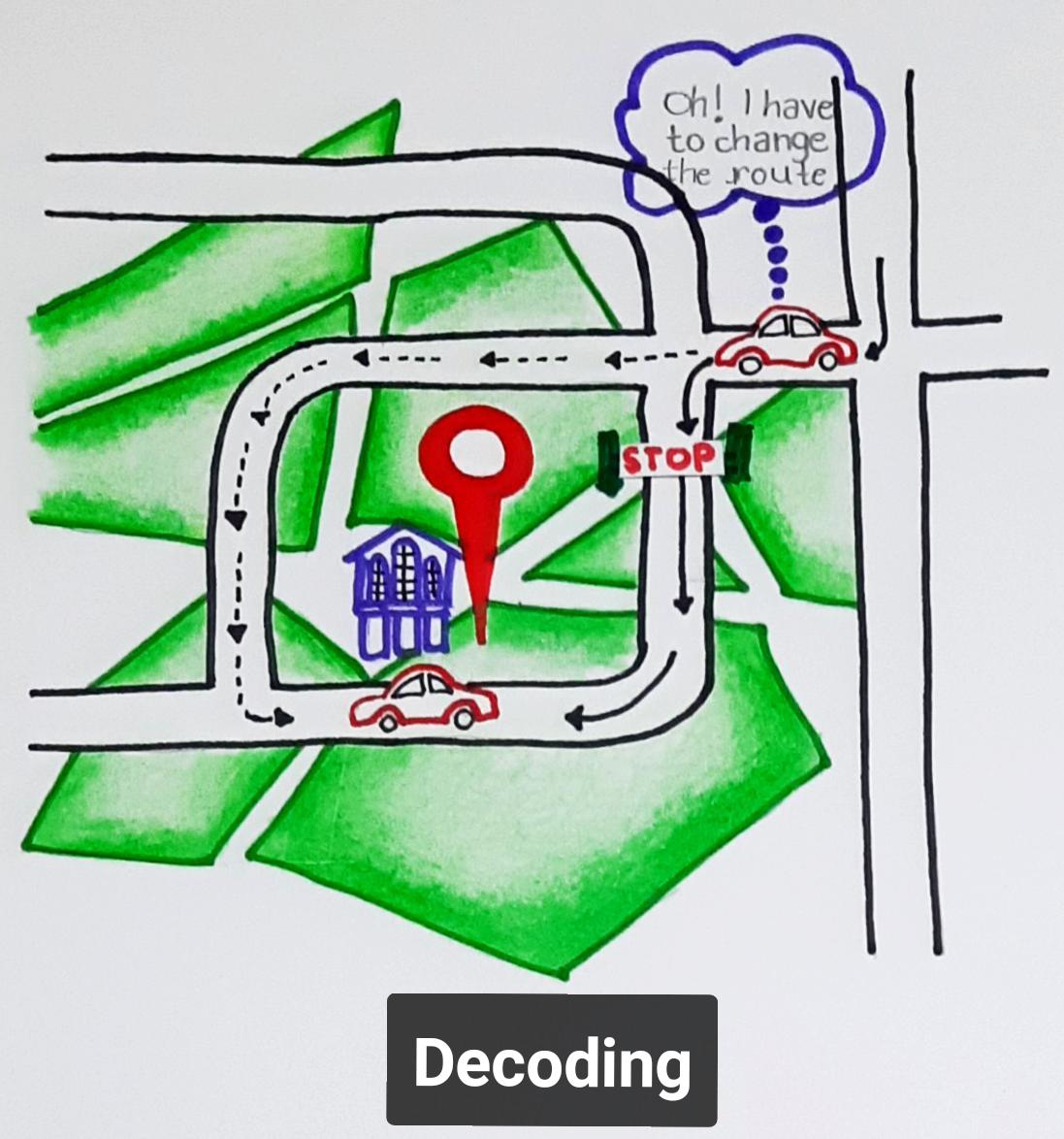

We can describe a facial expression in different languages: we could write ‘smile’, ‘muskurahat’, or ‘haasi’. Similarly, the nervous system has multiple ways to represent information in its own language. Encoding and decoding are the two processes used in information processing, and together they explain how neural circuits store and convey information. Encoding translates information into the brain’s language, or code. The code could be the firing rate, the firing pattern, and/or the time difference between firings. Decoding is roughly (though not exactly) the opposite process: it translates the neural code back into the environmental stimulus it represents.
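To make the three kinds of code concrete, here is a minimal sketch in Python. The spike times, the one-second window, and the choice of ten bins are made-up values for illustration, not measurements from a real neuron.

```python
# Hypothetical spike times (in seconds) from one neuron over a 1-second window.
spike_times = [0.05, 0.15, 0.25, 0.42, 0.47, 0.53, 0.91]
window = 1.0  # length of the recording in seconds (assumed)

# Firing rate: how many spikes occur per second.
firing_rate = len(spike_times) / window

# Time difference between firings: gap between each spike and the next one.
intervals = [later - earlier
             for earlier, later in zip(spike_times, spike_times[1:])]

# Firing pattern: split the window into 10 bins and mark bins that hold a spike.
n_bins = 10
bin_width = window / n_bins
pattern = [0] * n_bins
for t in spike_times:
    index = min(int(t / bin_width), n_bins - 1)  # keep t == window in the last bin
    pattern[index] = 1

print("firing rate (spikes/s):", firing_rate)
print("inter-spike intervals (s):", [round(gap, 2) for gap in intervals])
print("firing pattern:", pattern)
```

The same list of spikes yields three different descriptions, which is the sense in which a neuron has more than one way to "write" the same message.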

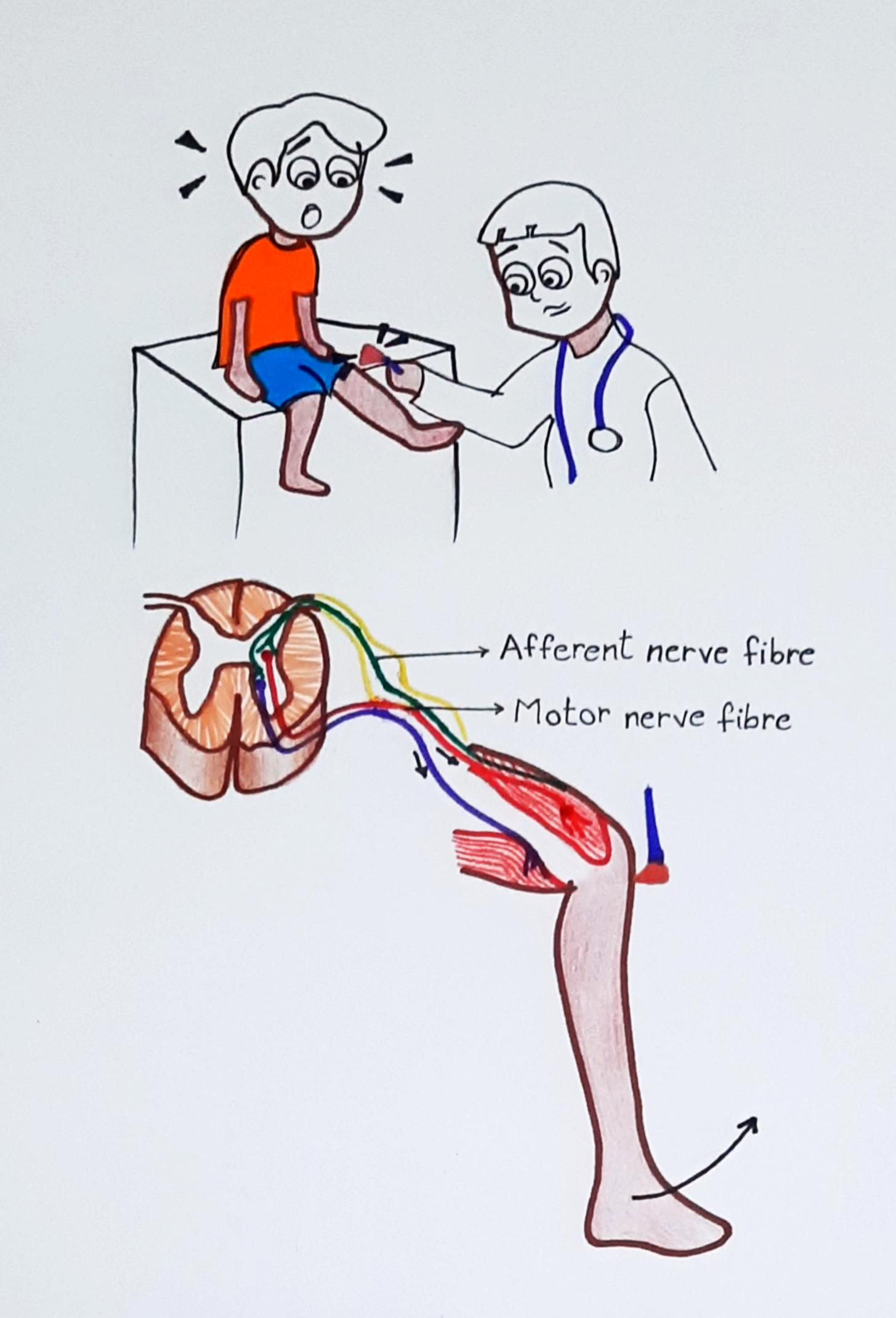

Let us have a look at an example of a simple neural circuit: the stretch reflex.

The stretch reflex is the knee-jerk response you show when a doctor taps your knee. The tap stretches the muscle and activates its mechanical receptors. These create action potentials (electrical impulses), which sensory neurons carry to the spinal cord. Motor neurons leaving the spinal cord then release chemicals called neurotransmitters to tell the muscle that it needs to contract. Here, the change in muscle length is the information that represents the stimulus, i.e., the tap you received. This information is encoded in the firing rate of the sensory neurons: faster firing implies faster stretch. Motor neurons need to decode this information and respond. When the firing rate of the sensory neurons is high, the motor neurons are depolarized more strongly and fire more, thereby telling the muscle to contract. (Adapted from the book “From Molecules to Networks”)
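The rate code in the stretch reflex can be sketched as a toy model. Everything numerical here is an assumption chosen for illustration: the baseline rate, the gain, the motor threshold, and the straight-line relationship between stretch speed and firing rate are not taken from the book.

```python
BASELINE_RATE = 5.0     # sensory firing rate at rest, in spikes/s (assumed)
GAIN = 20.0             # extra spikes/s per unit of stretch speed (assumed)
MOTOR_THRESHOLD = 15.0  # sensory rate above which the motor neuron fires (assumed)


def encode_stretch(stretch_speed):
    """Sensory neuron: a faster stretch gives a higher firing rate."""
    return BASELINE_RATE + GAIN * max(stretch_speed, 0.0)


def decode_stretch(sensory_rate):
    """Invert the encoder to estimate stretch speed from the firing rate."""
    return max(sensory_rate - BASELINE_RATE, 0.0) / GAIN


def motor_response(sensory_rate):
    """Motor neuron: silent below threshold, more active for stronger input."""
    return max(sensory_rate - MOTOR_THRESHOLD, 0.0)


for speed in (0.0, 0.5, 2.0):
    rate = encode_stretch(speed)
    print(f"stretch speed {speed}: sensory rate {rate}, "
          f"decoded speed {decode_stretch(rate)}, "
          f"motor drive {motor_response(rate)}")
```

In this sketch a gentle stretch raises the sensory rate without driving the motor neuron, while a fast stretch pushes it well past threshold, which mirrors the tap-then-kick sequence described above.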

The distinction between encoding and decoding can be understood using Bayes’ theorem, which gives the probability of an event given some known information.

Probabilistic models are widely used to model cognitive processes. Probability describes how likely it is that an event will occur. The simplest way to understand it is the coin example. Assume that we have an unbiased coin, one that is equally likely to land on either side. Then, when we toss it, a head and a tail are equally probable. What if the coin is weighted so that it favors heads? Then a head is more probable than a tail. Here, getting a head is an event. If we toss the unbiased coin 100 times, we will usually end up with about 50 heads. Probability assigns each event a number between 0 and 1, which makes it easy to work with in a model. In our unbiased coin example, the probability of getting a head is 50 out of 100, which is 0.5.
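The coin example is easy to try on a computer. The sketch below simulates tosses with Python's built-in `random` module; the function name, the number of tosses, and the 0.8 bias are arbitrary choices for illustration.

```python
import random


def estimate_heads_probability(p_heads, n_tosses, seed=0):
    """Toss a simulated coin n_tosses times and return the fraction of heads.

    p_heads is the true probability of a head (0.5 for an unbiased coin).
    The seed only makes the simulation repeatable.
    """
    rng = random.Random(seed)
    heads = sum(1 for _ in range(n_tosses) if rng.random() < p_heads)
    return heads / n_tosses


# An unbiased coin and a coin weighted towards heads (0.8 is an assumed bias).
print("unbiased coin:", estimate_heads_probability(0.5, 10_000))
print("biased coin:  ", estimate_heads_probability(0.8, 10_000))
```

With only 100 tosses the fraction of heads wobbles around the true value, and it settles closer to 0.5 (or 0.8) as the number of tosses grows.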

Encoding and decoding models are termed forward and backward models, respectively. Encoding models are also known as causality models, as they connect stimuli to the brain activity they produce. Decoding models, in contrast, help reconstruct the stimulus from brain activity. These are also known as predictive models.
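One common way to write down the link between the two directions is Bayes’ theorem. The symbols here are assumptions made for illustration and do not come from the sources: $s$ stands for a stimulus and $r$ for the neural response.

$$
P(s \mid r) = \frac{P(r \mid s)\, P(s)}{P(r)}
$$

Read this way, an encoding (forward) model describes $P(r \mid s)$, how likely a response is for a given stimulus, while a decoding (backward) model estimates $P(s \mid r)$, how likely a stimulus is given the observed response. The prior $P(s)$ captures how common each stimulus is to begin with.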

To explain Bayes’ theorem, suppose there is a room in which either your aunt or an elephant could be present. Since the room is inside your house, the probability that it is an elephant is low. Bayes’ theorem gives the probability that your aunt is in the room, given that it is your home. Let’s see how neural codes employ this. Our brain knows from previous experience or evolutionary hardwiring that it is more likely to be your aunt, so it comes up with a code to identify her. What if it’s an elephant? Then the brain has to come up with a whole new, unique code for an event that has a lower probability. (Adapted from the book “From Molecules to Networks”)
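Here is a small worked version of the aunt-or-elephant example. All the numbers are invented for illustration: the prior probabilities, and the idea that the only evidence is a loud thud heard from the room, are assumptions and not part of the original example.

```python
def posterior(priors, likelihoods):
    """Apply Bayes' theorem over a set of competing hypotheses.

    priors[h] is P(h) before any evidence; likelihoods[h] is P(evidence | h).
    Returns P(h | evidence) for every hypothesis h.
    """
    unnormalized = {h: priors[h] * likelihoods[h] for h in priors}
    evidence = sum(unnormalized.values())  # P(evidence), summed over hypotheses
    return {h: value / evidence for h, value in unnormalized.items()}


# Prior: in your own home, an elephant in the room is very unlikely (assumed).
priors = {"aunt": 0.999, "elephant": 0.001}

# Likelihood of hearing a loud thud under each hypothesis (assumed).
thud_likelihoods = {"aunt": 0.05, "elephant": 0.9}

beliefs = posterior(priors, thud_likelihoods)
for who, probability in beliefs.items():
    print(f"P({who} | thud) = {probability:.4f}")
```

Even though a thud is far more typical of an elephant, the strong prior keeps your aunt the most probable explanation in this sketch. That is the sense in which prior experience shapes how incoming evidence is read.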

This predictive modeling helps bridge neural activity and complex environmental stimuli. But pitfalls arise at multiple levels, such as feature selection, model extraction, model representation, and experimental design. Understanding these drawbacks and addressing them through advances in technology or collaborative efforts helps the models evolve. There is no perfect model to describe these processes; instead, we have the best available models, which update and evolve to accommodate new information.

Acknowledgements: Bharati Venkatachalam, Sveekruth Pai

Sources:

- Byrne, J. H., Heidelberger, R., Waxham, M. N., & Roberts, J. (2003). From Molecules to Networks. Elsevier Inc. https://doi.org/10.1016/B978-0-12-148660-0.X5000-8

- Holdgraf, C. R., Rieger, J. W., Micheli, C., Martin, S., Knight, R. T., & Theunissen, F. E. (2017). Encoding and Decoding Models in Cognitive Electrophysiology. Frontiers in Systems Neuroscience, 11, 61. https://doi.org/10.3389/fnsys.2017.00061

. . .

Writer

Thasneem Musthafa

She is an alumna of the Indian Institute of Science Education and Research (IISER), Pune. She is a biology major interested in exploring neuroscience, behavior, and epigenetics. She strongly endorses mental health advocacy, equality, and accessibility.

Illustrator

Shruti Morjaria

Shruti Morjaria is a self-learnt science artist and just another papercut survivor! She is completing her degree in cell and molecular biology. While she juggles between work and life, creativity keeps her sane. She says, “All you gotta do is be a passionate scribbler and see how creativity overflows!”